Why Annotation Quality Matters

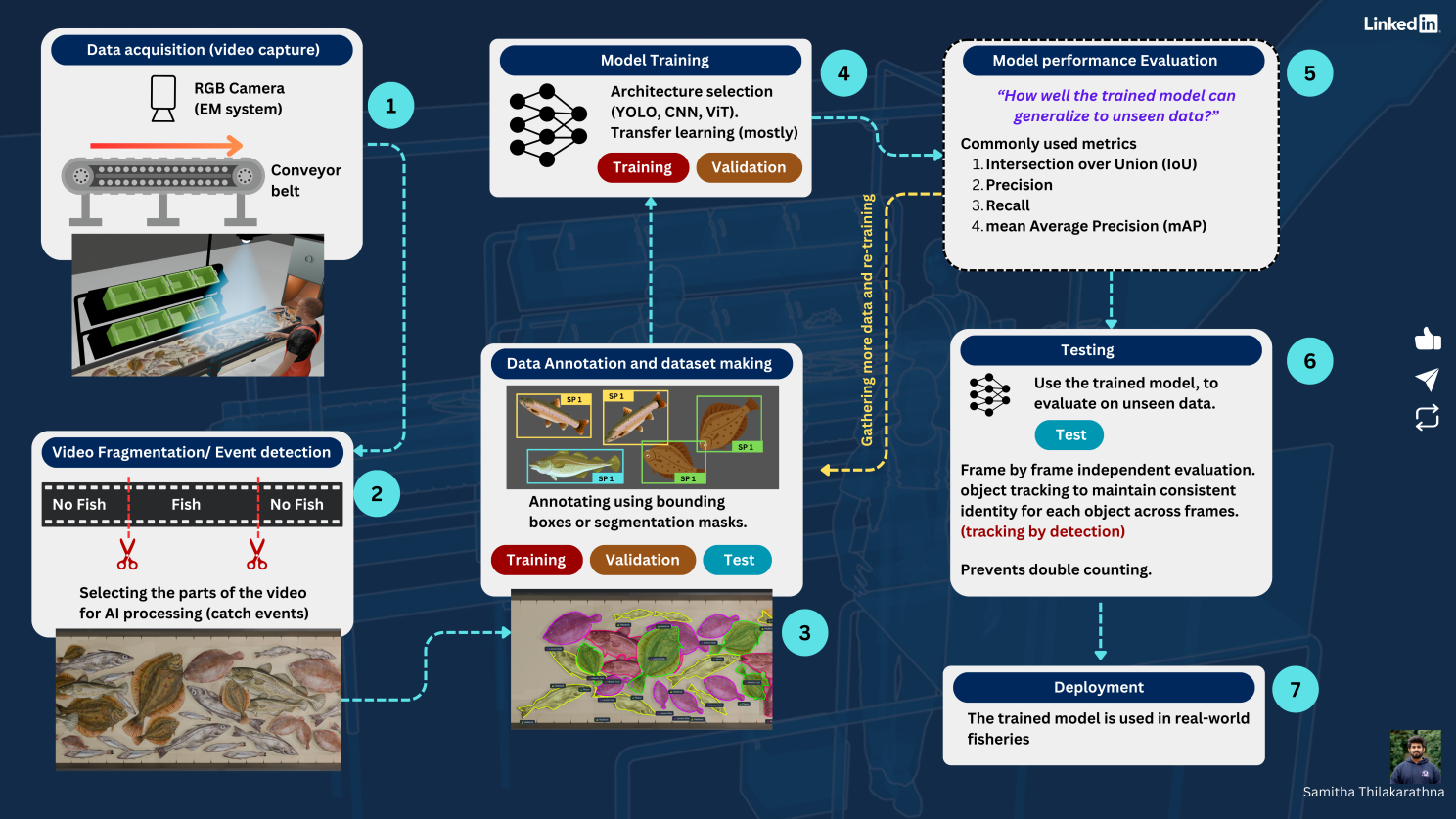

Robust deep learning models for fish species identification and re-identification depend critically on the quality of your labels. Poorly or inconsistently annotated datasets give the model contradictory targets, such as the same species under different names or boxes drawn to different standards. Precise, standardized annotations speed up training and make the resulting models more reliable in real-world use.

Choosing the Right Tool

Several annotation tools are available for computer vision:

- CVAT: Excellent for bounding boxes, polygons, multi-frame tracking

- Label Studio: Flexible for images, video, and even text/audio

- VGG Image Annotator: Lightweight, runs in-browser with zero setup

- makesense.ai: Simple web tool with various export options

Your workflow and desired output format will often dictate the best choice.

Best Practices for Fish Image Annotation

- Define a clear labeling protocol: what counts as a fish, how much occlusion is acceptable, and the minimum size worth labeling

- Use consistent class names (e.g., always "Atlantic mackerel", never a mix of "mackerel" and "mackerel - adult")

- Prefer polygons for fine-grained tasks such as instance segmentation; bounding boxes are sufficient for simpler tasks such as detection

- Quality control: have a second reviewer verify a subset of images

- Regularly export and back up your dataset in multiple formats (COCO, YOLO, Pascal VOC, etc.)
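Parts of the protocol and quality-control steps above can be automated. The minimal Python sketch below does two things. It checks class names against a fixed list, and it flags objects where a second reviewer's bounding box disagrees with the primary annotator's. The `ALLOWED_CLASSES` set, the 0.5 IoU threshold, and pairing boxes by a shared object ID are all illustrative assumptions. They are not features of any particular annotation tool.

```python
from typing import Dict, List, Tuple

# Hypothetical class list copied from a project's labeling protocol.
ALLOWED_CLASSES = {"Atlantic mackerel", "Atlantic cod", "Atlantic herring"}

# Axis-aligned box as (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def invalid_labels(labels: List[str]) -> List[str]:
    """Return the distinct labels that are not in the protocol's class list."""
    return sorted({name for name in labels if name not in ALLOWED_CLASSES})


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 if they don't overlap)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(primary: Dict[str, Box], review: Dict[str, Box],
                       threshold: float = 0.5) -> List[str]:
    """Return object IDs labeled by only one annotator, or whose boxes overlap below `threshold` IoU.

    Assumes both passes use the same object IDs (e.g. the reviewer edits the primary's boxes).
    """
    flagged = []
    for obj_id in sorted(set(primary) | set(review)):
        if obj_id not in primary or obj_id not in review:
            flagged.append(obj_id)  # missing from one pass
        elif iou(primary[obj_id], review[obj_id]) < threshold:
            flagged.append(obj_id)  # both drew it, but the boxes disagree
    return flagged


print(invalid_labels(["Atlantic mackerel", "mackerel", "mackerel - adult"]))
# ['mackerel', 'mackerel - adult']
print(flag_disagreements(
    {"fish_1": (0, 0, 10, 10), "fish_2": (20, 20, 30, 30)},
    {"fish_1": (1, 1, 10, 10), "fish_3": (0, 0, 5, 5)},
))
# ['fish_2', 'fish_3']
```

In practice a reviewer's boxes often do not share IDs with the primary annotator's. A fuller pipeline would therefore first match boxes, for example greedily by highest IoU. The ID-based pairing here only keeps the sketch short.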

Exporting Your Dataset

Most tools can export to the popular formats. For deep learning, COCO (detection and instance segmentation) and YOLO (object detection) are the most common. Always keep the tool's original annotation files (e.g., JSON or XML) so you can reprocess or re-export them later.
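To show what an export step does, here is a minimal Python sketch that converts a COCO detection file into YOLO label lines. It assumes the file has already been loaded as a dict, e.g. with `json.load`. It also assumes the standard COCO layout (`images`, `annotations`, `categories`), where `bbox` is `[x_min, y_min, width, height]` in pixels. The output follows the common YOLO text format: one `class x_center y_center width height` line per object, normalized to the image size. The function names `coco_to_yolo` and `write_yolo_labels` are hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def coco_to_yolo(coco: dict) -> Dict[str, List[str]]:
    """Map each image's file stem to its YOLO label lines."""
    # YOLO expects contiguous 0-based class indices; COCO category IDs
    # can start at 1 or have gaps, so remap them in sorted order.
    cat_ids = sorted(c["id"] for c in coco["categories"])
    cat_to_idx = {cat_id: idx for idx, cat_id in enumerate(cat_ids)}

    images = {img["id"]: img for img in coco["images"]}
    # Every image starts with an empty list, so background images still get a blank label file.
    labels: Dict[str, List[str]] = {Path(img["file_name"]).stem: [] for img in coco["images"]}

    for ann in coco["annotations"]:
        img = images[ann["image_id"]]
        img_w, img_h = img["width"], img["height"]
        x, y, w, h = ann["bbox"]  # top-left corner and size, in pixels
        x_c = (x + w / 2) / img_w  # box centre, normalized to [0, 1]
        y_c = (y + h / 2) / img_h
        cls = cat_to_idx[ann["category_id"]]
        labels[Path(img["file_name"]).stem].append(
            f"{cls} {x_c:.6f} {y_c:.6f} {w / img_w:.6f} {h / img_h:.6f}"
        )
    return labels


def write_yolo_labels(labels: Dict[str, List[str]], out_dir: str) -> None:
    """Write one <stem>.txt file per image into out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for stem, lines in labels.items():
        text = "\n".join(lines) + ("\n" if lines else "")
        (out / f"{stem}.txt").write_text(text)


# Example with a single hypothetical image and one mackerel box.
coco = {
    "images": [{"id": 1, "file_name": "tank_001.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 3, "name": "Atlantic mackerel"}],
    "annotations": [{"id": 10, "image_id": 1, "category_id": 3, "bbox": [100, 120, 200, 80]}],
}
print(coco_to_yolo(coco))
# {'tank_001': ['0 0.312500 0.333333 0.312500 0.166667']}
```

This conversion is one-way. It keeps only the boxes and drops segmentation polygons, `iscrowd` flags, and category names. YOLO stores class names separately, usually in a dataset config file, so the index order must be recorded there. Losing that information is one concrete reason to keep the original export.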